Researchers develop a simple and effective generative adversarial network known as SphereGAN for unsupervised image generation

SEOUL, South Korea, June 7, 2022 /PRNewswire/ -- Deep neural networks are widely used for object recognition, detection, and segmentation across many fields. Among them, generative adversarial networks (GANs) are a powerful class of neural networks whose performance exceeds that of conventional neural networks. GANs are trained to minimize the discrepancy between real and generated ("fake") data, and they have proven successful in image detection, medical imaging, video prediction, 3D image reconstruction, and more.

Despite their rapid growth over the last few years, GANs are not free of limitations. Training conventional GANs is difficult and computationally expensive, which makes them unreliable for complex computer vision problems. In addition, the data they generate often lacks diversity and looks unrealistic. As a result, many GANs train unstably and can handle only simple datasets.

To overcome these limitations, a team of researchers including PhD student Sung Woo Park and Professor Junseok Kwon from Chung-Ang University in Seoul proposed a simple solution. Their study, published in IEEE Transactions on Pattern Analysis and Machine Intelligence, was made available online on August 12, 2020, and appeared in Volume 44, Issue 3 of the journal on March 1, 2022.

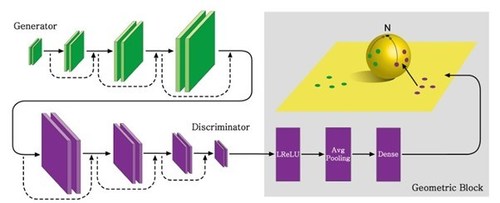

In this study, the team proposed a simple and effective GAN based on an integral probability metric (IPM), called ‘SphereGAN’. SphereGAN uses multiple geometric moments to measure the discrepancy between real and fake data distributions on the surface of a ‘hypersphere’. A hypersphere generalizes the ordinary sphere to higher dimensions and is a standard object of study in Riemannian geometry, the branch of mathematics concerned with curved spaces. "Compared to the trends of conventional generative models, we tried to bridge machine learning with the well-known sub-area of mathematics known as ‘Riemannian geometry’, since it has many positive implications for mathematical data," said Prof. Kwon, explaining the motivation behind this unique approach.
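The press release does not spell out the math, so the sketch below illustrates the idea under stated assumptions. Following our reading of the SphereGAN paper, feature vectors are mapped onto a unit hypersphere by inverse stereographic projection, and real and fake samples are compared through several moments (powers r = 1..R) of their geodesic distance to a fixed reference point, the north pole. The function names, the use of NumPy, `num_moments=3`, and the random stand-in features are illustrative assumptions, not the authors' code; in an actual GAN the features would come from a trained critic network.

```python
import numpy as np


def inverse_stereographic(p):
    """Map rows of p (shape (n, d)) onto the unit hypersphere S^d in R^(d+1)."""
    sq = np.sum(p ** 2, axis=1, keepdims=True)  # squared norm of each row
    return np.concatenate([2 * p / (sq + 1), (sq - 1) / (sq + 1)], axis=1)


def geodesic_to_north_pole(q):
    """Great-circle distance from each point on S^d to the north pole N = (0, ..., 0, 1)."""
    # The dot product q . N is just the last coordinate; clipping guards
    # arccos against values slightly outside [-1, 1] caused by rounding.
    return np.arccos(np.clip(q[:, -1], -1.0, 1.0))


def moment_discrepancy(real_feats, fake_feats, num_moments=3):
    """Hypothetical helper: sum over r = 1..R of E[d^r](real) - E[d^r](fake).

    real_feats / fake_feats stand in for critic outputs (an assumption);
    in a GAN the critic would try to maximize this value and the
    generator would try to minimize it.
    """
    d_real = geodesic_to_north_pole(inverse_stereographic(real_feats))
    d_fake = geodesic_to_north_pole(inverse_stereographic(fake_feats))
    return sum(
        np.mean(d_real ** r) - np.mean(d_fake ** r)
        for r in range(1, num_moments + 1)
    )


# Usage with random stand-in features (no trained network involved).
rng = np.random.default_rng(0)
real = rng.normal(size=(256, 8))
fake = rng.normal(loc=2.0, size=(256, 8))
print(moment_discrepancy(real, fake))
```

Using several moments instead of only the mean distance lets the comparison pick up differences in spread and shape of the two distance distributions, not just their averages; this is the intuition behind "multiple geometric moments", though the paper's exact weighting and training details may differ from this sketch.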

The team found that incorporating Riemannian geometry gave SphereGAN considerably better performance than conventional GANs.

For starters, SphereGAN's behavior could be verified mathematically, it trained stably, and it generated realistic images. Moreover, its favorable mathematical properties eliminated the need for additional steps such as virtual data sampling, which conventional GANs require. SphereGAN's use of multiple geometric moments also improved the accuracy with which it measures distances between distributions, an important aspect of image generation.

When the team tested the model on unsupervised 2D image generation and 3D point cloud generation, they observed that the resulting 2D images and 3D point clouds were more realistic than those produced by conventional GANs, regardless of the dataset's domain.

What are the long-term implications of using SphereGAN? "GANs have been employed for numerous applications in industrial fields, such as the generation of ‘deep fake’ images," explains Prof. Kwon. "Human perception cannot distinguish these fake images from real ones. In the coming years, these and more advanced image generation applications will be possible with a robust model like SphereGAN."

SphereGAN may well transform imaging applications across many industrial and research fields in the coming years.

Reference

Title of original paper: SphereGAN: Sphere Generative Adversarial Network Based on Geometric Moment Matching and its Applications

Journal: IEEE Transactions on Pattern Analysis and Machine Intelligence

DOI: https://doi.org/10.1109/TPAMI.2020.3015948

About Chung-Ang University

Website: https://neweng.cau.ac.kr/index.do

Contact:

Seong-Kee Shin

02-820-6614

337988@email4pr.com

SOURCE Chung-Ang University